My honest advice for learning programming and machine learning in 2026.

Introduction

I am not a programmer supremacist.

I don’t believe code is magic.

I don’t think learning Python will save your soul.

But I am increasingly convinced that most people trying to “learn AI” are starting at exactly the wrong place and are paying for it with years of confusion, cargo-cult knowledge, and false confidence.

People open a reinforcement learning paper.

They watch a Karpathy talk.

They paste something into ChatGPT.

And then they realize that they don’t actually know what’s happening.

This is the prequel.

Start here if:

you don’t know how to program

you don’t know machine learning

or you started reading the main article and felt that sinking “I should understand this but I don’t” feeling

If that’s you, good.

You’re early enough to avoid years of unlearning.

The First Lie: “I Know How to Code”

Having a PhD in AI does not mean you can program.

Having access to an LLM does not mean you can program.

Believing either of those is usually a negative signal.

Programming is harder than AI.

Not because it’s more complex but because it’s less forgiving.

Most researchers are bad programmers.

Most great programmers become competent researchers surprisingly fast.

I learned this the wrong way around.

Programming looks simple because it is simple.

But simple does not mean easy.

The real difficulty is that programming is almost always taught in a way that rewards cleverness instead of correctness. You’re trained to over-abstract, over-generalize, and over-engineer long before you understand the problem you’re trying to solve.

Unlearning this takes years.

If you’re brand new, you’re lucky. You don’t yet have bad instincts.

If you’re not new, you need to accept something right now:

your entire mental model of “good code” might be wrong.

Not flawed.

Wrong.

What Programming Actually Is

Programming is writing instructions that operate on data.

That’s it.

The instruction set is embarrassingly small:

assign data to variables

evaluate conditions

loop

group instructions into functions
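Here is a minimal sketch of those four pieces in Python. The function name `count_evens` and the sample data are made up for illustration.

```python
# Hypothetical example: count the even numbers in a list.
def count_evens(numbers):          # group instructions into a function
    count = 0                      # assign data to a variable
    for n in numbers:              # loop
        if n % 2 == 0:             # evaluate a condition
            count = count + 1
    return count


data = [3, 8, 5, 12, 7, 10]
print(count_evens(data))  # prints 3
```

Nothing here is clever. That is the point.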

You can learn the tools in a weekend.

You will spend a lifetime learning how to use them well.

This is why programming skill is not linear.

a bad programmer creates problems

a good programmer solves problems

a great programmer removes entire classes of problems

The distance between those is not talent.

It’s taste.

Enough philosophy.

Here’s how to actually learn.

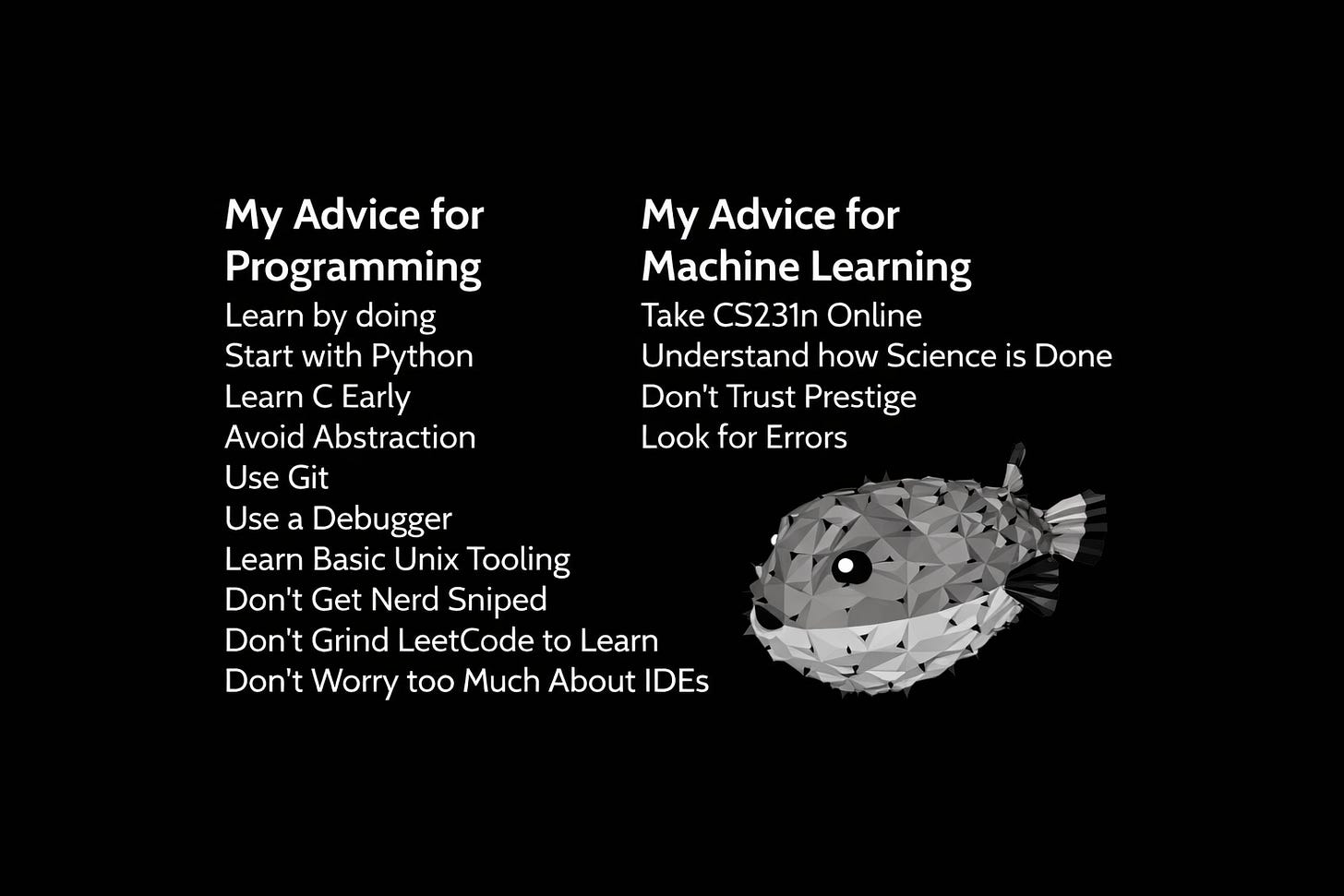

Learn Programming Like an Adult

1. Learn by Doing, Immediately

Start with something that takes hours.

Then days.

Games are excellent because failure is visible. The screen goes black. The character doesn’t move. The bug humiliates you in public.

Use raylib. It’s boring in the best possible way. Lightweight, opinionated, and written by people who clearly care about simplicity.

If a tool feels exciting, it’s probably stealing your attention.

2. Start With Python (But Don’t Linger)

Yes, many experienced developers hate Python.

Yes, they’re often right.

Start with it anyway.

Python removes friction so you can focus on logic instead of ceremony. Almost every AI system you’ll touch has Python at the top layer.

But here’s the important part: don’t stay.

Python’s design nudges you toward laziness if you’re not careful. Avoid:

heavy external packages

inheritance hierarchies

decorators

clever tricks

Force yourself to express everything as:

assignments

conditions

loops

functions

Build a few small things.

Then leave.

3. Learn C Early (Not First)

C is honest.

Painfully honest.

It forces you to confront what the machine is actually doing instead of hiding it behind abstractions.

You should understand:

types and casts

memory allocation

stack vs heap

pointers

compilation and linking

This is why I don’t recommend C first. It demands too much context at once.

But learning it early rewires your brain permanently.

Avoid C++ for now. It’s C plus decades of accumulated mistakes.

4. Avoid Abstraction Like the Plague

Solve the problem you have.

Not the problem you’re fantasizing about.

Abstraction is seductive because it feels like intelligence. In reality, it’s often fear disguised as foresight.

C helps because it removes temptation.

No inheritance.

No magic.

Just you and the problem.

5. Use Git or Lose Your Mind

Make GitHub repos by default.

Commit often.

This is how you:

don’t lose work

understand your own mistakes

stop being afraid of breaking things

Version history is psychological safety.

6. Use a Debugger

Print statements are for emergencies.

A debugger lets you:

pause time

inspect reality

stop guessing

Use pdb for Python.

Use gdb + address sanitizers for C.
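As a rough sketch of what this looks like with pdb: Python's built-in `breakpoint()` pauses the program at that line and opens the debugger. The function `average` and its bug are invented for illustration.

```python
# Hypothetical buggy function: it should return the mean of a list.
def average(values):
    total = 0
    for v in values:
        total += v
    # Uncomment the next line to pause here and inspect `total` and `values`.
    # breakpoint()
    return total / (len(values) - 1)   # bug: divides by n - 1 instead of n


print(average([2, 4, 6]))  # prints 6.0, but the mean is 4.0
```

At the `(Pdb)` prompt, `p total` prints a variable, `n` runs the next line, and `c` continues. You can also run a whole script under the debugger with `python -m pdb script.py`.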

7. Learn Basic Unix Tooling

The terminal is not optional.

You need maybe ten commands:

ls, cd, cat, mv, cp, mkdir, top, pwd, head

Linux is best.

macOS is fine.

Windows requires WSL.

Do not obsess over customization. You’re learning to think, not to decorate your environment.

8. Don’t Get Nerd-Sniped

Avoid:

dogmatic OOP and FP

test-driven development as religion

language hopping

distro hopping

Python type hints

build-system rabbit holes

modern web frameworks

“best practices” discourse

the new hot thing on X

9. Don’t Learn Programming via LeetCode

LeetCode trains interview performance, not programming.

Yes, learn basic data structures.

No, mastering dynamic programming won’t make you effective.

Projects matter.

Shipping matters.

10. IDEs Don’t Matter

Your editor is not your skill.

Use something boring.

Run code from the terminal.

Avoid AI-first editors until you can think without them.

Autocomplete is fine.

How to Learn Machine Learning (After You Can Code)

Becoming a competent researcher is easier than becoming a good programmer.

This is why most researchers are terrible programmers.

If you reverse the order, everything improves.

Unlike programming, ML education is actually decent.

Do CS231n.

Watch Karpathy or Johnson.

Do the problem sets.

Yes, all of them.

You need:

matrices

partial derivatives

That’s it.

Once you finish, you’ll understand:

backprop

autograd

PyTorch
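To make the idea behind backprop concrete, here is a small sketch in plain Python. It assumes a one-neuron model, computes the partial derivatives by hand with the chain rule, and checks them numerically. The function names and numbers are illustrative and not taken from CS231n or PyTorch.

```python
# Hypothetical model: prediction = w * x + b, loss = (prediction - target)^2.
def loss(w, b, x, target):
    prediction = w * x + b
    error = prediction - target
    return error * error


def gradients(w, b, x, target):
    # Forward pass: compute and keep the intermediate values.
    prediction = w * x + b
    error = prediction - target
    # Backward pass: apply the chain rule from the loss back to w and b.
    dloss_derror = 2.0 * error          # d(error^2)/d(error)
    dloss_dprediction = dloss_derror    # error = prediction - target
    dloss_dw = dloss_dprediction * x    # prediction = w * x + b
    dloss_db = dloss_dprediction        # d(prediction)/db = 1
    return dloss_dw, dloss_db


def numeric_gradients(w, b, x, target, eps=1e-6):
    # Central differences: nudge one input at a time and watch the loss.
    dw = (loss(w + eps, b, x, target) - loss(w - eps, b, x, target)) / (2 * eps)
    db = (loss(w, b + eps, x, target) - loss(w, b - eps, x, target)) / (2 * eps)
    return dw, db


w, b, x, target = 3.0, 1.0, 2.0, 5.0
print(gradients(w, b, x, target))          # prints (8.0, 4.0)
print(numeric_gradients(w, b, x, target))  # approximately the same values
```

Autograd, roughly speaking, automates the backward pass that `gradients` writes out by hand. The numeric check is a common way to confirm a hand-written gradient is right.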

Reading Papers Without Losing Your Sanity

Start reading papers.

You will feel stupid.

That’s normal.

Most papers are written under constraints you need to understand:

page limits

reviewer incentives

GPU scarcity

deadline panic

This explains:

unnecessary math

missing ablations

weak baselines

contradictory results

The strongest signals are:

open code

multiple replications

real usage

Even then, trust cautiously.

How Good Researchers Actually Think

Assume the paper is wrong.

Look for cracks.

Ask:

are controls weak?

are conclusions overfitted?

are assumptions shared by everyone in the area?

The biggest opportunities appear when an entire subfield is wrong in the same way.

Progress comes from pattern recognition plus skepticism.

Conclusion

If you want to work on AI, don’t start with AI.

Start with programming.

Real programming.

Boring programming.

Most people are building castles on sand and wondering why they collapse.

Learn to think clearly.

The rest compounds.